Building an Image Content Search System Using Vespa

Using vector databases to search on images databases and personalize experiences

After many years working in the field of search and recommendation systems for text I always wanted to explore other modalities and with the proliferation of social media, e-commerce, and content-sharing platforms like TikTok, images have become crucial in engaging users and delivering relevant content. So I considered building an image content search system with Vespa as a vector database. Here are some thoughts…

Why Vector databases are gaining popularity

In recent years vector databases are exploding in popularity. The reasons are multiple but some of them are game-changing. For example:

- Keyword searches have some limitations when it comes to capturing synonyms and related concepts effectively.

- Efficient Similarity Search: Vector databases allow for efficient similarity searches, where vectors that are close to each other in the vector space are likely to represent similar data items. This is particularly valuable for tasks like content-based recommendation systems, image searches, and others.

- Multimodal Search: With the rise of multimodal data (data that combines multiple types, such as images, text, and video), vector databases offer a way to index and search across these different modalities. This enables complex searches involving multiple data types, like finding images related to a specific text query or vice versa.

Building our system

To start building an image content search system, we need to understand the different components involved in the process. These components work together to process, index, and retrieve images efficiently. Here are the key components:

1. Image Data Collection: The first step is to gather a dataset of images that you want to index and make searchable. For the purpose of this blog, I am going to use https://www.kaggle.com/datasets/itsahmad/indoor-scenes-cvpr-2019. Basically, the database contains 67 Indoor categories and a total of 15620 images. The number of images varies across categories, but there are at least 100 images per category. All images are in jpg format.

2. Data Preprocessing: Image data often requires preprocessing before indexing. This step involves tasks like resizing images to a uniform size, normalizing pixel values, and converting images to a format compatible with the chosen deep-learning model.

3. Feature Extraction: Feature extraction is a critical step in image content search systems. It involves using pre-trained deep learning models (for example convolutional neural networks — CNNs) to extract high-dimensional embeddings from images. These embeddings represent the content of the images in a dense vector space, capturing semantically meaningful information.

4. Vector Database: This is where Vespa comes into play. Vespa acts as the vector database, storing the extracted image embeddings and allowing efficient similarity searches. It is crucial to configure Vespa properly to handle vector-based searches efficiently.

5. Indexing Images: Once the embeddings are generated, they need to be indexed in Vespa’s vector database. The indexing process involves creating a mapping between the image filenames (ids) and their corresponding embeddings, enabling fast and accurate retrieval during search queries.

6. Search API: You need to implement a Search API that communicates with Vespa’s vector database and handles incoming image search queries. The API takes user-provided images, extracts their embeddings using the same feature extraction model used during indexing, and sends the search request to Vespa to find the most similar images.

7. Similarity Search: Vespa’s vector database performs the similarity search by using a distance metric between the query image embedding and the indexed image embeddings. The results are ranked in descending order of similarity, and the top matches are returned to the user.

8. User Interface: The interface allows users to interact with the system, submit queries, and visualize the search results effectively.

The final architecture looks like this:

Embedding service

The objective of the embedding service is to convert raw image data into dense, high-dimensional vectors (embeddings) that effectively capture the semantic information and visual features of each image. These embeddings serve as compact and meaningful representations of the images, enabling efficient similarity searches and content-based retrieval within the image content search system.

I chose gRPC for developing this service mainly because it is high performance. gRPC uses Protocol Buffers (protobuf) as serialization format, which is a binary and efficient data interchange format. This leads to faster data transmission and reduced payload size, making it ideal for transmitting image embeddings, which can be high-dimensional.

For the model, I am using a Mobilenet pre trained on ImageNET. Mainly because I don’t have a GPU and is a model quite fast. This model receives images of size 224x224x3 and produces 1280-dimensional embeddings. So, we need to make sure that all the images are scaled and preprocessed to match the size. Also, in order to send the image to the gRPC service I will transform the image to base64.

class ImageProcedureServicer(embedding_pb2_grpc.ImageProcedureServicer):

def __init__(self):

self.model = tf.keras.applications.MobileNetV2(weights="imagenet", include_top=False, pooling="avg")

def ImageToEmbedding(self, request, context):

b64decoded = base64.b64decode(request.b64image)

imgarr = np.frombuffer(b64decoded, dtype=np.uint8).reshape(1, request.width, request.height, -1)

hyp = self.model.predict(imgarr)

return embedding_pb2.Embedding(embedding=list(tf.math.l2_normalize(hyp[0])))Code for the embedding service on: https://github.com/marescas/indoor-tiktok/blob/main/embedding_service/embedding_service.py

Vector Database: Vespa

Vespa will be the main database for the application. Vespa is an open-source, high-performance, and scalable database developed by Yahoo. It is designed for building real-time, large-scale applications like search, recommendation, and personalization systems. By using Vespa you can search by keyword matching with the classical bm25 index but also with embeddings and Approximate Nearest Neighbour.

In the application, I will create an index with 3 fields:

- An id of type string that allows us to identify an image

- A filename of type string that allows us to identify the path for the image we need to load in the frontend

- An embedding of 1280 dimensions in float 32. Also, I will create an HNSW index to perform ANN

Also, I will create a rank profile that performs a similarity distance between a query embedding and the embedding stored in Vespa. The results will be ranked by this profile.

from vespa.package import ApplicationPackage, Field, FieldSet, HNSW, RankProfile

app_package = ApplicationPackage(name="images", create_query_profile_by_default=False)

app_package.schema.add_fields(

Field(name="id", type="string", indexing=["attribute", "summary"]),

Field(name="filename", type="string", indexing=["summary"]),

# innerproduct works well if we have the embeddings normalized for example with L2 :)

Field(name="embedding", type="tensor<float>(x[1280])", indexing=["index", "attribute", "summary"],

ann=HNSW(

distance_metric="innerproduct",

max_links_per_node=16,

neighbors_to_explore_at_insert=200,

))

)

app_package.schema.add_rank_profile(

RankProfile(name="semantic-similarity", inherits="default", first_phase="closeness(embedding)",

inputs=[("query(query_embedding)", "tensor<float>(x[1280])")]))Also, it is important to notice that the vectors should be normalized to ensure vectors are of unit length (1). This enables the use of inner product distance metric, optimizing computations for the Approximate Nearest Neighbor search. This is accomplished by using an L2 normalization on the embedding service (take a look on the embedding service section).

Code for the indexer in: https://github.com/marescas/indoor-tiktok/blob/main/indexer/indexer.py

Frontend and search API

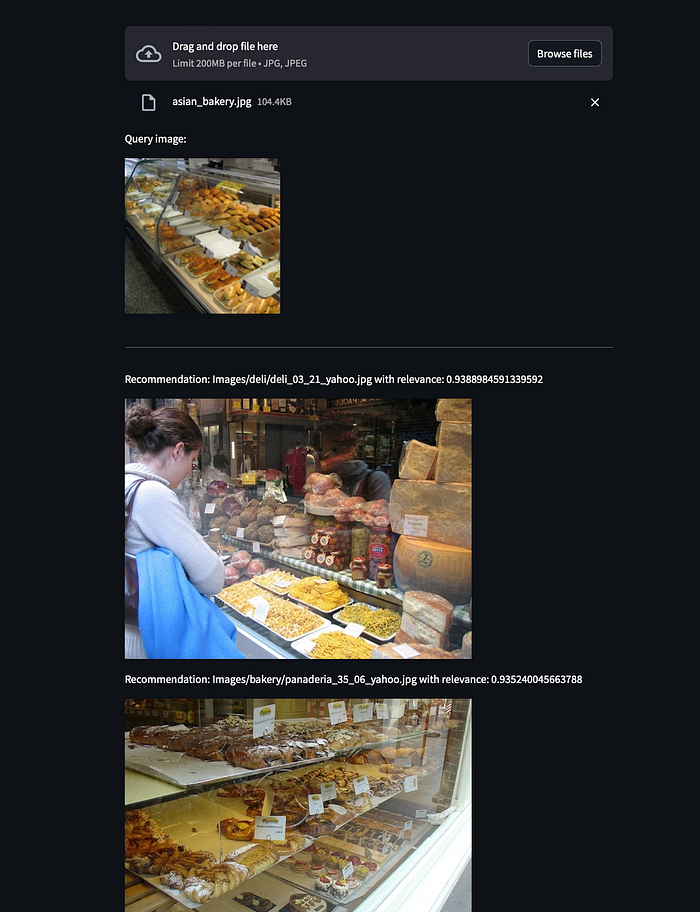

The requirement for the frontend is quite simple. A user needs to upload an image and the app needs to display other images that are similar. For that, as we can see in the image of the architecture for the app, the flow should be:

- User should upload the image on the frontend

- The image is sent to the search API

- The search API transforms the image to an embedding using the embedding service

- The search API search for similar images by sending a query to Vespa with the embedding and computing X closest neighbors

- The results are displayed on the frontend

For simplicity, instead of creating a separate service for the search API, everything is encapsulated on the frontend. The frontend is created with Streamlit and allows all the described functionality.

if uploaded_file is not None:

with Image.open(uploaded_file) as img:

# resize image

frame = img.resize(size=(224, 224))

# encode

data = base64.b64encode(np.array(frame))

image_req = embedding_pb2.B64Image(b64image=data, width=224, height=224)

try:

# get embedding

response = stub.ImageToEmbedding(image_req)

# get similar images with ANN on Vespa

recommendations = vespa_endpoint.query(body={

'yql': (

'select filename from images where ({targetHits:100,approximate:true}nearestNeighbor(embedding,query_embedding)) ;'),

'hits': 10,

'input.query(query_embedding)': list(response.embedding),

'ranking.profile': 'semantic-similarity'

})

rec = recommendations.get_json()

for recommendation in rec["root"]["children"]:

# display recommendations

except Exception as e:

print(f"Error {str(e)}")Frontend code: https://github.com/marescas/indoor-tiktok/blob/main/frontend/frontend.py

And the frontend looks like:

Points of improvement and conclusions

Building an effective image search system requires a careful approach to ensure optimal performance and scalability. While the code presented in this post serves as a valuable starting point, it requires several improvements to be production-ready.

- Implement batch predictions: Currently, the code processes images one by one in the embedding service. To boost indexing speed, we should incorporate batching of image data. This enhancement will take full advantage of GPUs batching capabilities, significantly improving system efficiency.

- Explore better embedding models: While the current model works well on non-GPU machines, exploring alternative models can lead to improved embedding quality. Investing time in evaluating different models will pay off in terms of enhanced search accuracy and overall user satisfaction. Additionally, you have the option of training your model with the data. A common technique in natural language processing involves fine-tuning a BERT model within the specific domain using the Masked Language Modeling (MLM) task. Afterward, employing the SentenceBERT approach, you can further adapt the BERT model to generate high-quality text embeddings.

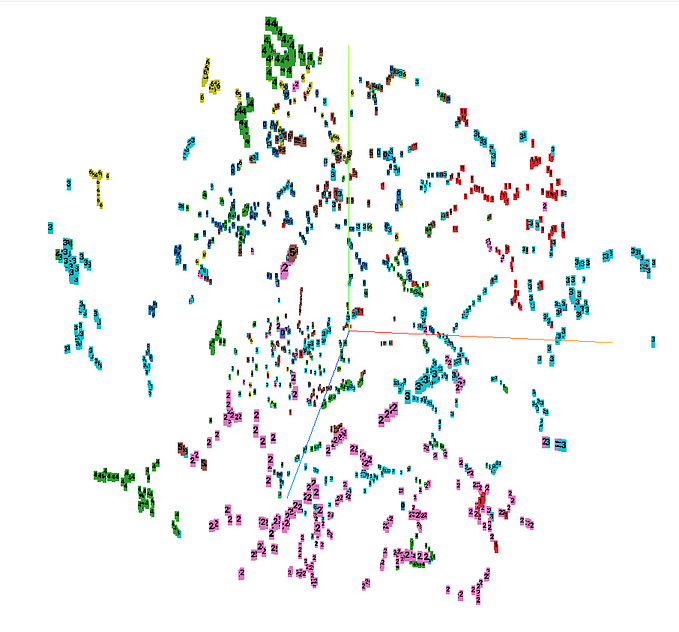

- Dimensionality Reduction Techniques: Storing embeddings of 1280 dimensions can create big challenges with large image databases (Thinking on a dataset of several billions of images…). By employing dimensionality reduction techniques like distillation, we can reduce the number of dimensions to a more manageable size (e.g., 128). Striking the right balance between quality and space requirements is crucial.

- Data Type Optimization: Consider using more space-efficient data types like int8 or bfloat16 instead of float32 for embedding storage. This optimization will lead to significant space savings while losing some embedding quality.

- I am not performing any metric evaluation. Having the labels will allow us to run an evaluation on metrics like MRR to see how the system is going.

I hope this post inspires you to embark on the journey of building image search systems. Happy building!

Full code on: https://github.com/marescas/indoor-tiktok/tree/main

About the author ✍🏻

Marcos Esteve is a ML Engineer. At his work, Marcos works mainly on Natural Language Processing tasks. He is quite interested in multimodality, search tasks, Graph Neural Networks and building data science apps. Contact him on Linkedin or Twitter